The pitfalls of chasing statistical significance: Don’t add more data to make your results significant!

Dr. Vanessa Cave

27 July 2022

Recently I was reviewing a scientific publication and a certain segment in the Methods section caused me to sweat. In brief, the authors had performed an analysis on a data set with n observations. After discovering an interesting but non-significant effect, they had added a further m observations to their data set and re-analysed it to “increase the power”.

Before I delve into why adding more data to achieve significance is an issue, I would first like to commend the authors on providing a complete description of their methods, and not glossing over important details such as the one above. Only by reporting our methodology accurately and completely can our research be properly scrutinized and independently reproduced, and our findings appropriately interpreted.

The issue with adding more data for significance

So, what’s the problem with adding more data to achieve statistical significance?

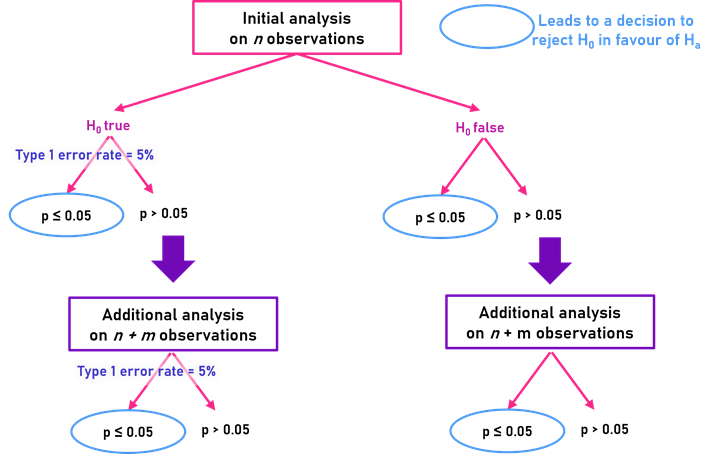

Well… in doing so you increase the probability of obtaining a significant effect by random chance. In other words, you increase the chance of a type I error (i.e., rejecting the null hypothesis when in fact it is true).

Understanding the impact of conditional data addition

At the nub of the issue is the decision to add more data based on a known outcome. In particular, the addition of the m extra observations is conditional on the results from the analysis of the first n observations. You can read more about this in Professor James Curran’s excellent StatsChat blog entitled “Just one more…”. This blog illustrates the issue very nicely using a coin toss example and a horse racing analogy.

Examining the dangers: A simple example

Say we’re interested in comparing two means: $\mu_1$ and $\mu_2$. We construct an appropriate statistical test based on the following null ($H_0$) and alternative ($H_1$) hypotheses:

$$H_0: \mu_1 = \mu_2 \qquad H_1: \mu_1 \neq \mu_2$$

and assess the strength of evidence against the null hypothesis, as provided by our observed data, using a p-value. For simplicity, let’s assume a significance level (or type I error rate) of 5%. Our p-value is either:

- less than or equal to 5% (in which case we can reject the null hypothesis in favour of the alternative hypothesis and conclude that there is a difference between the two means), or

- greater than 5% (in which case we have insufficient evidence to reject the null hypothesis).

Under such a testing scenario, the probability that we reject the null hypothesis when in fact it is true is 5%. That is, there is a 5% probability of accepting the alternative hypothesis when the results can be attributed to chance.

As the authors I mention above have done, let’s “change the rules” of our test by adding some more data if the difference in means is promisingly large (or analogously, the p-value is small, but not quite small enough), and then having another go at rejecting the null hypothesis. Crucially, in doing so the probability that we erroneously reject the null hypothesis is no longer 5%. In fact, it is larger!

Intuitively, we can think of this as accumulating debt from our earlier test. Regardless of what happens next, there was a 5% probability of rejecting the null hypothesis by chance in the first analysis. Conversely, there was a 95% probability of not rejecting the null hypothesis, given that it is true. Adding more data and re-analysing it doesn’t change these probabilities; we have already incurred a 5% type I error rate from the first analysis and this debt can’t be forgotten. Any second test can only add further chances of a false rejection on top of it.
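To see the inflation in action, here is a minimal simulation sketch. It is not taken from the publication I reviewed: the sample sizes (`n`, `m`), the “promising” p-value threshold of 0.20, and the use of a z-test with a known standard deviation are all assumptions, chosen so the example stays short and needs only the Python standard library.

```python
import random
from statistics import NormalDist, fmean


def z_test_p_value(x, y, sigma=1.0):
    """Two-sided p-value for H0: equal means, assuming a known common SD."""
    se = sigma * (1 / len(x) + 1 / len(y)) ** 0.5
    z = (fmean(x) - fmean(y)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_type_i_error(n=20, m=20, alpha=0.05, promising=0.20,
                          n_sims=20000, seed=1):
    """Estimate type I error rates when the null hypothesis is true.

    Returns (rate for a single analysis of n per group,
             rate when m more per group are added after a 'promising' result).
    All default values are hypothetical.
    """
    rng = random.Random(seed)
    rejected_first = 0
    rejected_overall = 0
    for _ in range(n_sims):
        # Both groups come from the same distribution, so H0 is true.
        x = [rng.gauss(0, 1) for _ in range(n)]
        y = [rng.gauss(0, 1) for _ in range(n)]
        p = z_test_p_value(x, y)
        if p <= alpha:
            rejected_first += 1
            rejected_overall += 1
        elif p <= promising:
            # "Promising" but non-significant: add more data and test again.
            x += [rng.gauss(0, 1) for _ in range(m)]
            y += [rng.gauss(0, 1) for _ in range(m)]
            if z_test_p_value(x, y) <= alpha:
                rejected_overall += 1
    return rejected_first / n_sims, rejected_overall / n_sims


if __name__ == "__main__":
    single, topped_up = simulate_type_i_error()
    print(f"single analysis:        {single:.3f}")
    print(f"with conditional top-up: {topped_up:.3f}")
```

Under these assumed settings the single analysis should reject a true null hypothesis about 5% of the time, while the “add more data if promising” rule should reject it more often, because every rejection at the second look is added to the 5% already spent.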

Why sequentially adding data is problematic

It’s perhaps easier to appreciate why sequentially adding more data until we finally have a data set that enables us to reject the null hypothesis is such a bad thing to do. Why adding just a little more data to “give our analysis more power” is also wrong is possibly harder to wrap our heads around. But ultimately, it boils down to adding more data because we haven’t got the result we want. Hardly a fair approach!

So, what should the authors have done? Better approaches for robust results

Using the information from the initial analysis, the authors can conduct a power analysis to determine the sample size required to detect the effect of interest. Based on this sample size, the authors should then collect independent data (i.e., perform another experiment) and use this new data to test their hypothesis independently of the first data set.
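As a rough illustration of such a power analysis, the sketch below uses the common normal-approximation formula for comparing two means with equal group sizes. The function name `required_n_per_group` and the planning values (a difference of 0.5, a standard deviation of 1, 80% power) are hypothetical; a real analysis would use values appropriate to the study and, typically, dedicated software.

```python
import math
from statistics import NormalDist


def required_n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample test.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 * sigma^2 / delta^2
    delta: difference in means we want to detect
    sigma: assumed common standard deviation
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = 2 * (z_alpha + z_power) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical planning values, not taken from any real study.
    print(required_n_per_group(delta=0.5, sigma=1.0))
```

Because this relies on a normal approximation, an exact calculation based on the t-distribution may give a slightly larger sample size.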

Alternatively, the authors could have designed a priori a sequential analysis trial. Such an approach involves adjusting the significance level at each round of testing so that the overall type I error rate remains at the desired level. For example, for an overall type I error rate of 5%, and a maximum of two testing rounds, each test should be conducted at the 2.94% significance level (according to Pocock’s boundary). Sequential analysis is popular in clinical trials where, for ethical reasons, researchers want the option of stopping the trial early if the results from an initial group of patients provide the evidence they seek.
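The 2.94% figure can be checked numerically. The sketch below is an illustration under stated assumptions, not a general group-sequential tool: it assumes z-tests, two equally sized stages, and a second test carried out on the accumulated data from both stages. The function name `overall_type_i_error` is my own.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def overall_type_i_error(alpha_per_look, steps=2000):
    """Overall two-sided type I error for two looks, each at alpha_per_look.

    Under H0 the first-stage statistic Z1 is N(0, 1), and the cumulative
    statistic is Z2 = (Z1 + Z') / sqrt(2), where Z' is an independent N(0, 1)
    statistic from the second stage. We integrate over Z1 (midpoint rule)
    to get the probability that neither look rejects.
    """
    c = STD_NORMAL.inv_cdf(1 - alpha_per_look / 2)  # critical value per look
    root2 = 2 ** 0.5
    width = 2 * c / steps
    p_no_rejection = 0.0
    for i in range(steps):
        z1 = -c + (i + 0.5) * width
        # |Z2| <= c  is equivalent to  -c*sqrt(2) - z1 <= Z' <= c*sqrt(2) - z1
        p_second_ok = (STD_NORMAL.cdf(c * root2 - z1)
                       - STD_NORMAL.cdf(-c * root2 - z1))
        p_no_rejection += STD_NORMAL.pdf(z1) * p_second_ok * width
    return 1 - p_no_rejection


if __name__ == "__main__":
    print(f"each look at 2.94%: overall {overall_type_i_error(0.0294):.4f}")
    print(f"each look at 5%:    overall {overall_type_i_error(0.05):.4f}")
```

Testing each look at 2.94% should bring the overall error rate back to roughly 5%, whereas naively testing both looks at 5% leaves it well above that.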

About the author

Dr. Vanessa Cave is an applied statistician interested in the application of statistics to the biosciences, in particular agriculture and ecology, and is a developer of the Genstat statistical software package. She has over 15 years of experience collaborating with scientists, using statistics to solve real-world problems. Vanessa provides expertise on experiment and survey design, data collection and management, statistical analysis, and the interpretation of statistical findings. Her interests include statistical consultancy, mixed models, multivariate methods, statistical ecology, statistical graphics and data visualisation, and the statistical challenges related to digital agriculture.

Vanessa is currently President of the Australasian Region of the International Biometric Society, past-President of the New Zealand Statistical Association, an Associate Editor for the Agronomy Journal, on the Editorial Board of The New Zealand Veterinary Journal and an honorary academic at the University of Auckland. She has a PhD in statistics from the University of St Andrews.
