The power of confidence intervals: beyond p-values

Dr. Vanessa Cave

07 June 2021

As an applied statistician working on team research projects, on occasion I’ve battled the misguided view that my key contribution is “rubber stamping” p-values onto research output, and not just any p-value, ideally ones less than 0.05! I don’t dislike p-values, in fact, when used appropriately, I believe they play an important role in inferential statistics. However, simply reporting a p-value is of little value: we also need to understand the strength and direction of the effect and the uncertainty in its estimate. That is: I want to see the confidence interval (CI). Let me explain why.

Beyond p-values: the missing piece

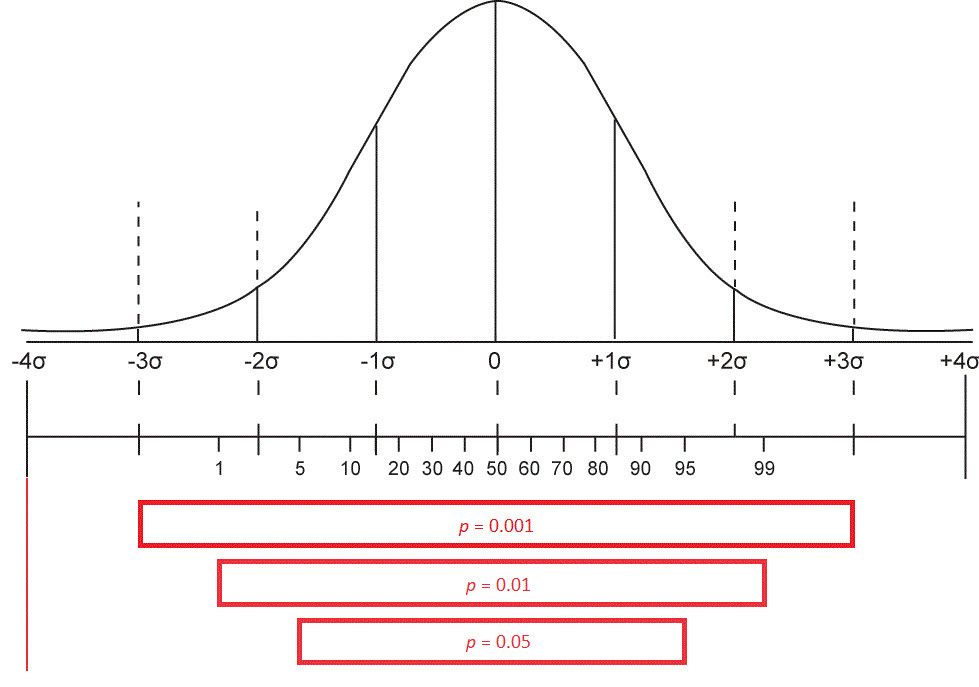

P-values are used to assess whether there is sufficient evidence of an effect. More formally, they provide a measure of strength against the null hypothesis (of no effect). The smaller the p-value, the stronger the evidence for rejecting the null hypothesis in favour of an alternative hypothesis. However, the p-value does not convey any information on the size of the effect or the precision of its estimate. This missing information is very important, and it is here that CIs are extremely helpful.

A confidence interval provides a range of plausible values within which we can be reasonably confident the true effect actually falls. |

Let’s look at an example.

From meat to meaning: interpreting practical differences

A few years ago I was involved in a research programme studying the effect a particular treatment had on the pH of meat. pH is important because it impacts the juiciness, tenderness, and the taste of meat. If we could manage pH, we could improve the eating quality. A randomised controlled experiment was conducted, the data analysed, and tadah! There was a statistically significant difference between the treatment and control means (p-value = 0.011).

- which mean is lower?

- a lower pH is better

- how big is the difference?

- a small difference in pH, such as less than 0.1, won’t result in a practical difference in eating quality for consumers

- how precise is the estimated size of the effect?

- having enough precision is critical for meat producers to make an informed decision about the usefulness of the treatment

A CI will give us this information.

In our example, the 95% CI for the difference between the control and treatment mean is:

0.013 ≤ μcontrol – μtreatment ≤ 0.098

Telling us that:

- the mean pH for the treatment is indeed statistically lower than for the control

- the 95% CI is narrow (i.e., the estimate of the effect is precise enough to be useful)

but …

- the effect size is likely too small to make any real-world practical difference in meat eating quality.

The power of inference: conclusions drawn from CIs

As the above example illustrates, CIs enable us to draw conclusions about the direction and strength of the effect and the uncertainty of its estimation, whereas, p-values are useful for indicating the strength of evidence against the null hypothesis. Hence, CIs are important for understanding the practical or biological importance of the estimated effect.

About the author

Dr Vanessa Cave is an applied statistician interested in the application of statistics to the biosciences, in particular agriculture and ecology, and is a developer of the Genstat statistical software package. She has over 15 years of experience collaborating with scientists, using statistics to solve real-world problems. Vanessa provides expertise on experiment and survey design, data collection and management, statistical analysis, and the interpretation of statistical findings. Her interests include statistical consultancy, mixed models, multivariate methods, statistical ecology, statistical graphics and data visualisation, and the statistical challenges related to digital agriculture.

Vanessa is currently President of the Australasian Region of the International Biometric Society, past-President of the New Zealand Statistical Association, an Associate Editor for the Agronomy Journal, on the Editorial Board of The New Zealand Veterinary Journal and an honorary academic at the University of Auckland. She has a PhD in statistics from the University of St Andrew.

Popular

Related Reads